With ad costs soaring, Agamatic founder Ian Britton explains how generative video allows agencies to pre-visualize and validate concepts before committing resources.

Britton lays out a playbook where teams A/B/C test dozens of AI-generated outputs to identify a winning idea before applying human talent to produce the final version.

This pre-testing process is a powerful tool for mitigating reputational risk, allowing brands to privately gauge sentiment on creative angles or celebrity cameos.

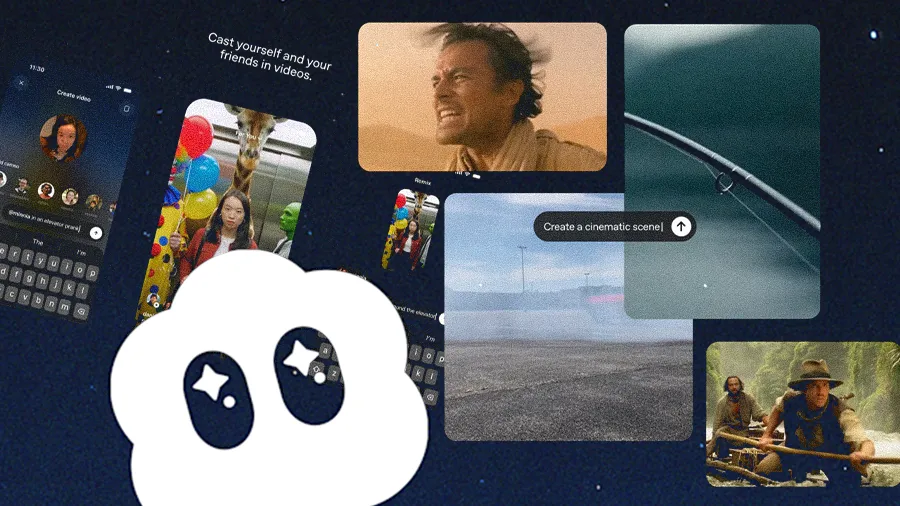

AI-generated video has turned pre-production into the most exciting part of the show. With tools like the newly released Sora 2, storyboards no longer sit still. Creative teams can bring scenes to life, test ideas in motion, and refine every beat before the cameras ever roll. That means fewer guesses, smarter risks, and a clearer path from concept to masterpiece.

Ian Britton, founder of the AI and data consultancy Agamatic, has spent his career helping teams turn complexity into clarity. A former Bain consultant with a sharp eye for where data meets creativity, he’s now outlining what the next era of agency work will look like, and how rigorous testing will set the real innovators apart.

"There’s tremendous value in generating ten different videos and seeing which one lands. It's all going to be about testing. Not just A/B testing, but A/B/C/D/E/F/G testing the outputs, and then going back to the drawing board to recreate the winners with your own capabilities. That's what is going to give ads that extra oomph," Britton says. Especially in high-stakes moments like the Super Bowl, where a single ad slot can run $8 million, AI-powered pre-visualization gives agencies the freedom to test, refine, and validate concepts before production, turning every second of airtime into money well spent.

Crossing the finish line: "You can let AI run the laps, generate a dozen versions, and find the one that clicks. But that’s just the starting line," he explains. "The real work begins when you take that winning concept and rebuild it with your own craft, refining every detail until it feels unmistakably human." Britton believes the magic happens where data-driven discovery meets the creative precision only people can deliver.

Calling the shots: For him, the future of creativity belongs to those who can see the story clearly enough to lead both the humans and the machines that bring it to life. "You’re simply guiding the model in this process. You decide the tone, the rhythm, the emotion. There’s no such thing as an AI artist. Only AI directors."

That same pre-testing process also helps brands protect their reputations. With new AI tools that allow for realistic character insertion, teams can quietly test celebrity cameos or explore sensitive creative angles, gathering real audience feedback before a single frame goes public.

Megastar mockups: "If I wanted Ed Sheeran selling Heinz ketchup, I could generate 18 different versions of that idea using every creative prompt I can think of. I could test tone, setting, and story before Ed Sheeran ever steps into a studio. That ability to play with the concept first, to imagine and iterate on collaborations before committing, completely changes how you plan a campaign," says Britton. He sees this as the future of creative development, where brands can explore bold partnerships and refine their storytelling long before the real-world commitments begin.

The creative process is no longer a straight line from idea to execution. It’s a loop that rewards curiosity, experimentation, and the ability to connect tools in clever ways. As generative platforms evolve, success won’t belong to the teams with the biggest budgets but to those who understand how to orchestrate the technology with intention. The moat lies in knowing what to use, when to use it, and how to turn digital possibility into emotional impact.
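For teams that want to make the multi-variant testing step concrete, here is a minimal sketch in Python. It is not Britton's method or any particular tool's API: the `Variant` class, the favorable-response counts, and the choice of a two-proportion z-test are all illustrative assumptions. The idea is simply to rank the AI-generated cuts by how often test viewers responded favorably, then check whether the leader's margin over the runner-up is larger than chance would easily explain.

```python
import math
from dataclasses import dataclass


@dataclass
class Variant:
    """One AI-generated cut shown to a test audience (hypothetical structure)."""
    name: str
    views: int      # number of test viewers shown this cut
    positives: int  # viewers who responded favorably

    @property
    def rate(self) -> float:
        # Share of viewers who responded favorably.
        return self.positives / self.views


def rank_variants(variants):
    """Return variants sorted from highest to lowest favorable-response rate."""
    return sorted(variants, key=lambda v: v.rate, reverse=True)


def two_proportion_z(a: Variant, b: Variant) -> float:
    """z-statistic for the difference in favorable rates between two variants."""
    pooled = (a.positives + b.positives) / (a.views + b.views)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / a.views + 1 / b.views))
    return (a.rate - b.rate) / std_err


# Made-up numbers for illustration only; real data would come from audience tests.
variants = [
    Variant("A", views=500, positives=60),
    Variant("B", views=500, positives=95),
    Variant("C", views=500, positives=70),
    Variant("D", views=500, positives=55),
]

ranked = rank_variants(variants)
winner, runner_up = ranked[0], ranked[1]
z = two_proportion_z(winner, runner_up)

print(f"Leader: {winner.name} ({winner.rate:.1%}), runner-up: {runner_up.name} ({runner_up.rate:.1%})")
print(f"z-statistic for the gap: {z:.2f}")
```

A z-statistic above roughly 1.96 is the conventional bar for a single two-sided comparison at the 5% level. When a dozen or more variants are in play, a stricter threshold or a fresh confirmation round for the apparent winner is a sensible precaution before committing production budget.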

"Staying up to date on what’s out there and how you can make these flows work together is what’s going to make you exceptional. Teams will stand out by effectively using and experimenting with the full toolbox of current AI models. I've seen success by generating a striking initial image in Midjourney, doing quick refinements in Gemini 2.5 Flash (Nano Banana), then bringing it to life in Sora 2," Britton shares. "Try it. But then also explore short-form prompts directly in Sora 2 to see what the model can creatively come up with on its own. The stack may evolve, but the advantage is the loop: prototype broadly, A/B test the heck out of the variants, and double down on what resonates," he concludes.